OpenAI’s new GPT-4.5: Expensive and Underwhelming.

This new model is telling us something important about the near future of Artificial Intelligence

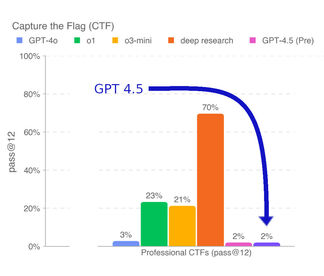

The cost of AI models has been plummeting recently, yet OpenAI just launched GPT-4.5, a frontier model that is up to 1,000 times more expensive than alternatives from DeepSeek, Mistral, and Google. GPT-4.5 is 68 times more expensive than even OpenAI's own o3-mini, while often performing worse, according to OpenAI's own report.

To put this into perspective, a website running Business Landings AI-Search or ChatBar AI might have an AI cost ("token cost") of around €10/month when using Mistral's small AI model or Google's Gemini model. This would increase to around €300/month when using Anthropic's top-of-the-range Claude Sonnet model. But if you instead use GPT-4.5, it would cost a stunning €7,500/month.
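As a rough sanity check, the ratios between these figures can be worked out directly. The sketch below uses only the approximate monthly costs quoted above. The dictionary keys and the `cost_ratio` helper are illustrative names, not part of any product or API.

```python
# Approximate monthly token costs quoted in this article (EUR per month).
# These are the article's rough estimates, not official price lists.
monthly_cost_eur = {
    "Mistral small / Google Gemini": 10,
    "Anthropic Claude Sonnet": 300,
    "OpenAI GPT-4.5": 7_500,
}


def cost_ratio(model: str, baseline: str) -> float:
    """Return how many times more expensive `model` is than `baseline`."""
    return monthly_cost_eur[model] / monthly_cost_eur[baseline]


# Compare GPT-4.5 against each cheaper option.
for baseline in ("Mistral small / Google Gemini", "Anthropic Claude Sonnet"):
    ratio = cost_ratio("OpenAI GPT-4.5", baseline)
    print(f"GPT-4.5 vs {baseline}: {ratio:.0f}x")
```

On these numbers, GPT-4.5 comes out at 750 times the cost of the small Mistral or Gemini models and 25 times the cost of Claude Sonnet.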

So what’s going on?

It appears that GPT-4.5 was originally meant to be GPT-5 but didn’t meet expectations. Instead, OpenAI released it under a different name. OpenAI CEO Sam Altman himself has called it "a giant, expensive model", and it appears to signal that OpenAI has hit the practical limits of scaling up AI in this way.

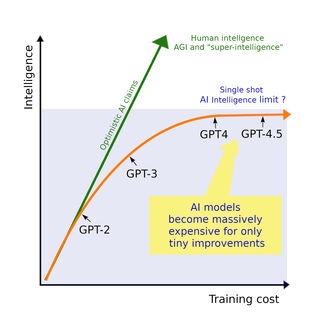

Figure 2 shows this graphically. The GPT type of AI has met a limit. This sort of quick question-and-answer ("single-shot") AI chat is no longer improved much by adding more expensive training and data. It is also worth noting that this is further evidence that AI models are not like humans, and do not look like they will attain general human-like intelligence any time soon.

Artificial Intelligence progress by brute force may now be a lot slower than most in the industry had predicted.

💡 Why would OpenAI release such a model?

- Testing the limits – AI research (including OpenAI’s own research into the scaling laws and the "entropy of natural language") has suggested diminishing returns from ever-larger models. Someone had to test the boundaries, and OpenAI did just that.

- GPT-4.5 has more knowledge coverage – Simply due to its sheer size, it has a broad knowledge base.

- Targeting "vibe", "feeling" & personality – Anthropic’s Claude has long been seen as the leader in producing text with personality and tone. OpenAI may be positioning GPT-4.5 as an alternative. But at 25 times the cost of Claude, it remains a tough sell.

- Niche strengths – While poor at maths and programming, GPT-4.5 was found to perform well at, for example, persuasion. It could extract fictional money out of other AI models 💰.

- A stepping stone for future models – OpenAI may be using GPT-4.5 to refine techniques for its next generation of models.

The big takeaways

- AI LLMs (Large Language Models) of this kind may have reached their practical limits for now – The idea of infinitely scaling up AI models is, as expected, running into diminishing returns while the costs edge into the billions of dollars range. Expect the hype around AGI (Artificial General Intelligence) or ASI ("super-intelligence") to cool down.

- AI models are becoming a commodity – With cheaper, competitive alternatives emerging, the business case for massive AI investments is getting hard to justify.

The price/performance of GPT-4.5 suggests that we are at the end of this type of AI model development (non-reasoning LLMs). AI progress will now be a lot slower than much of the industry hype had claimed. This may in fact be good, as we may now need to make more out of the cheaper models that we already have.

🤔 How can OpenAI GPTs be getting more expensive while Mistral & DeepSeek get cheaper?

It might seem contradictory that open-source AI vendors like DeepSeek and Mistral have recently developed models that rival OpenAI's GPTs in capability, yet at a fraction of the cost.

There are several reasons for this. One key factor is that DeepSeek appears to have efficiently re-used existing research and advancements made by others 🔄, as well as adding a few clever tweaks of its own. However, if DeepSeek and Mistral attempt to develop entirely new, massive frontier AI models, they will likely face the same cost and scaling challenges as OpenAI.